Preface

Let me tell you about my journey into virtual reality. Back in 2013, I was asked to work on the development of a virtual reality experience for an indoor attraction park. It was an exciting time: VR was really new, the Oculus DK1 had just been announced on Kickstarter, and the world seemed to be moving in a virtual direction. But there were a lot of technical challenges to overcome. The Oculus DK1 hadn’t been released yet when we started, and a room-scale VR solution hadn’t even been invented! So we contacted all the early HMD producers (Sensics, VReality, etc.) and looked for tracking solutions using Kinect 2, Vicon or even suits.

Despite the many obstacles we faced, we put in a lot of hard work and research, trying out dozens of different hardware and software solutions.

After half a year of experimenting with building apps for the Oculus DK1 and with different tracking methods, we settled on a solution. We ended up building a fully markerless motion-tracking system with 24 high-speed cameras that tracked up to 4 people. We connected it over the network to a computer running a Unity VR scene and used a modified DK1 with a laptop in a backpack to create 6-DOF room-scale VR. Since we had no controllers, everything was done by moving the body. We had virtual lava fields to cross, bubbles to pop, and hallways to pass.

It was a huge achievement, but unfortunately the project had to end after two years. The VR hardware and software just weren’t up to par at the time, and the operational issues were too great, for the targeted use case: one of the first VR-based theme park attractions. Although the project pivoted in another direction, the experience I gained from it was invaluable. I learned about markerless motion capture, the Unity3D game engine, and the endless possibilities of virtual reality. It was only a matter of time before I started exploring opportunities to create my own VR projects.

Something I always wanted and a proof of concept.

In 2014/2015, after building some things on the DK1 and DK2 in Unity3D for the project I was working on, I took the plunge and decided to create something that could spark creativity and that I had always wanted myself – a Virtual Reality Recording Studio!

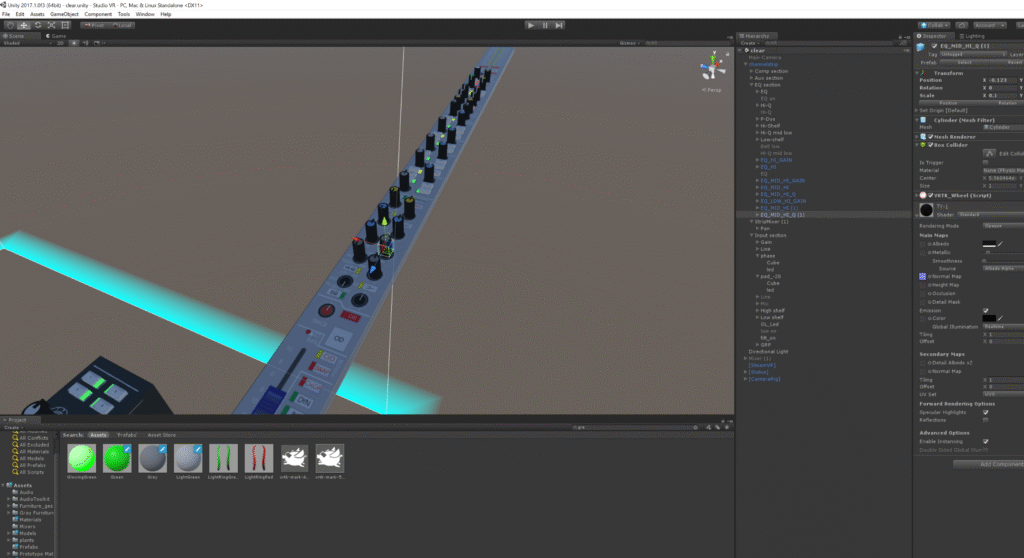

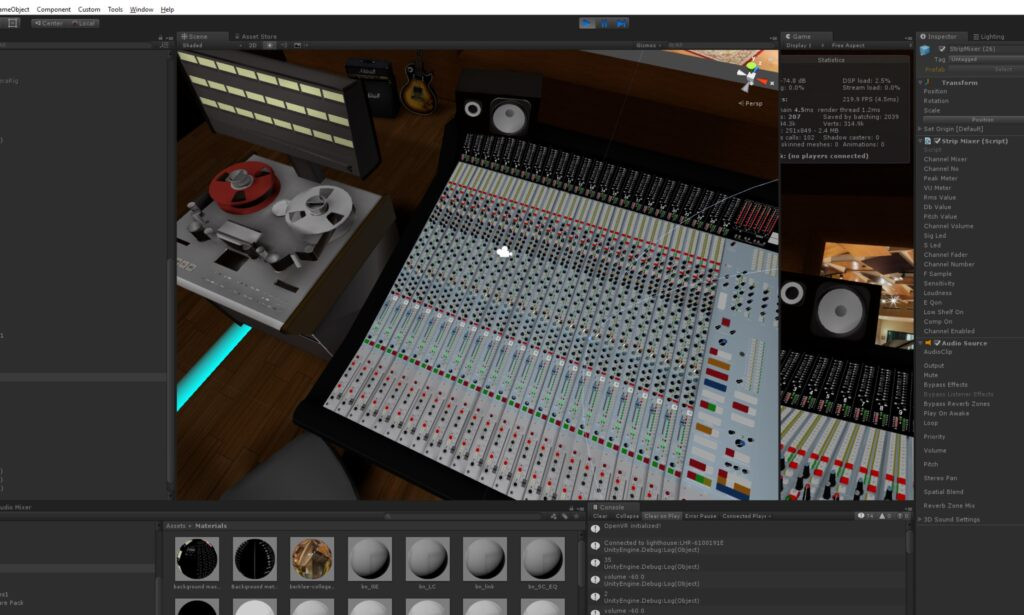

The first step was to do research and see if it was possible to create a working mixing desk in a game engine. I started building and ran some very successful tests. Some had sliders moving around, others controlled volume while playing multiple tracks, and I even made a quick test to change some EQ settings during playback. As this all worked like a charm, I continued on. In my free time I improved my skills in Unity3D, C# programming and 3D modelling in Blender, while working on other projects for various clients during business hours.
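For readers curious about what a "slider controls volume" test boils down to, here is a minimal sketch in C++. It is an illustration only, not the prototype's actual code: the function names `faderToGain` and `applyGain` and the -60 dB to +10 dB fader range are assumptions made for this example. The idea is that the fader position is mapped to decibels first and only then converted to a linear multiplier, so that equal fader travel sounds like an equal change in loudness.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical fader law (an assumption for this sketch): position 0.0 is the
// bottom of the fader, 1.0 the top. The position maps linearly in decibels
// from minDb to maxDb, and the very bottom is treated as a hard mute.
inline float faderToGain(float position, float minDb = -60.0f, float maxDb = 10.0f) {
    assert(minDb < maxDb);
    if (position <= 0.0f) return 0.0f;   // fader fully down: silence
    if (position > 1.0f) position = 1.0f; // clamp anything above the top
    const float db = minDb + position * (maxDb - minDb);
    return std::pow(10.0f, db / 20.0f);   // decibels -> linear amplitude
}

// Scale every sample of one track's buffer by the linear gain.
inline void applyGain(float* samples, int count, float gain) {
    for (int i = 0; i < count; ++i) {
        samples[i] *= gain;
    }
}
```

With these assumed defaults, a fader at the top gives a multiplier of about 3.16 (+10 dB), and a fader at 60/70 of its travel sits at unity gain (0 dB).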

After some months of learning and trying, I was finally ready to take on this new VR project and continue to push the boundaries of what’s possible with this amazing technology.

I decided to take my project to the next level and started working on a full-scale prototype. But before I could begin, I had to decide what equipment to replicate in my virtual reality recording studio. I wanted the centrepiece to be the console, so I scoured the internet for inspiration. Initially, I had my heart set on building a Focusrite console, because of the documentary The Story of the Focusrite Studio Console, but I couldn’t find enough reference material and photos to work from.

So I turned my attention to a console from another documentary (Sound City) – the legendary Neve console. However, the Neve consoles came in a lot of variations, and I couldn’t find enough documentation online to build them.

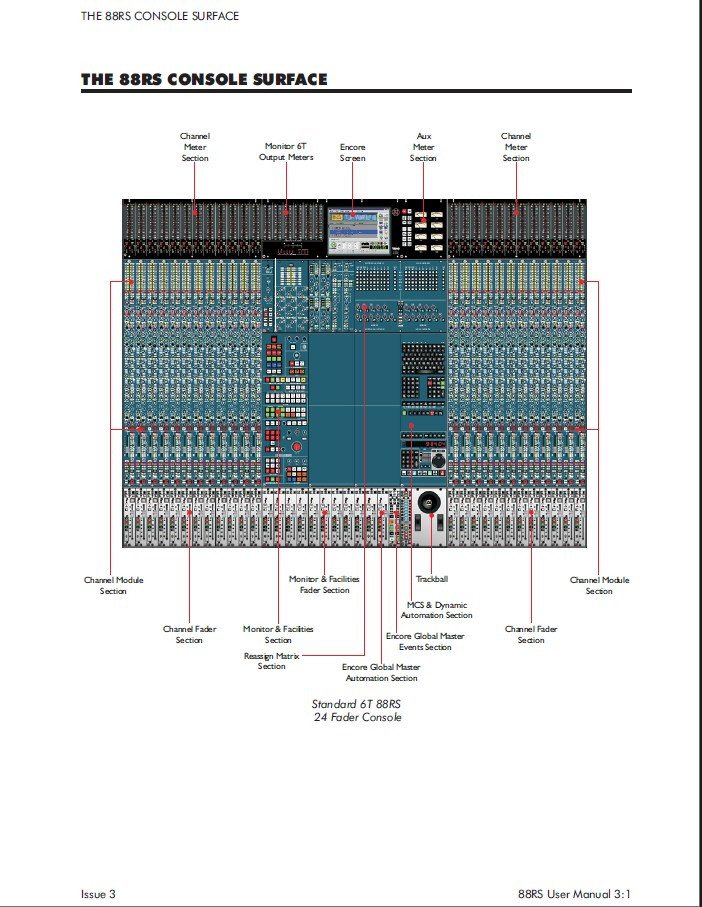

But I did manage to find a manual for the AMS Neve series. First, I found a manual for the most advanced 88RS, which explains the console color of my first tests, but later I came across the VR Legend, and that was it! It was already in the name that this particular console was destined to be the first VR console.

Building a proof of concept to convince myself.

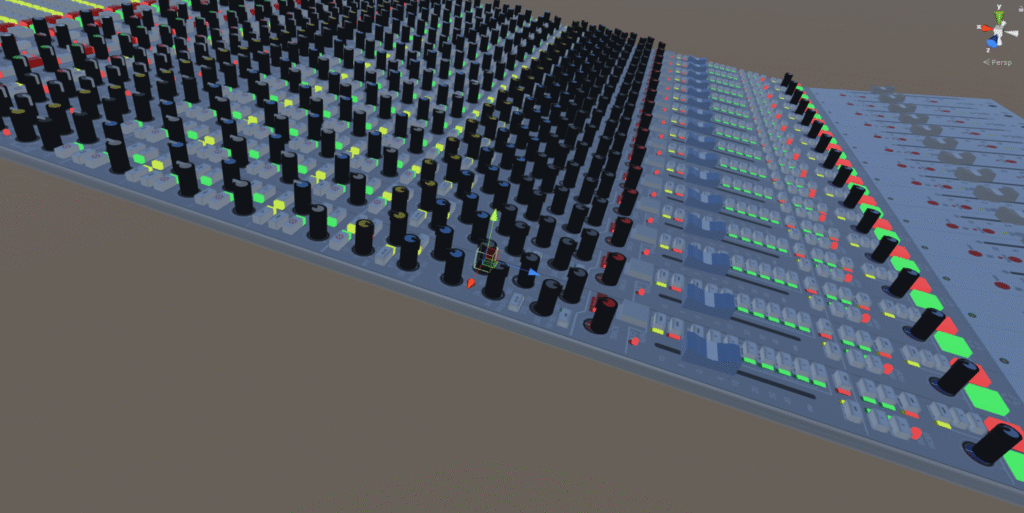

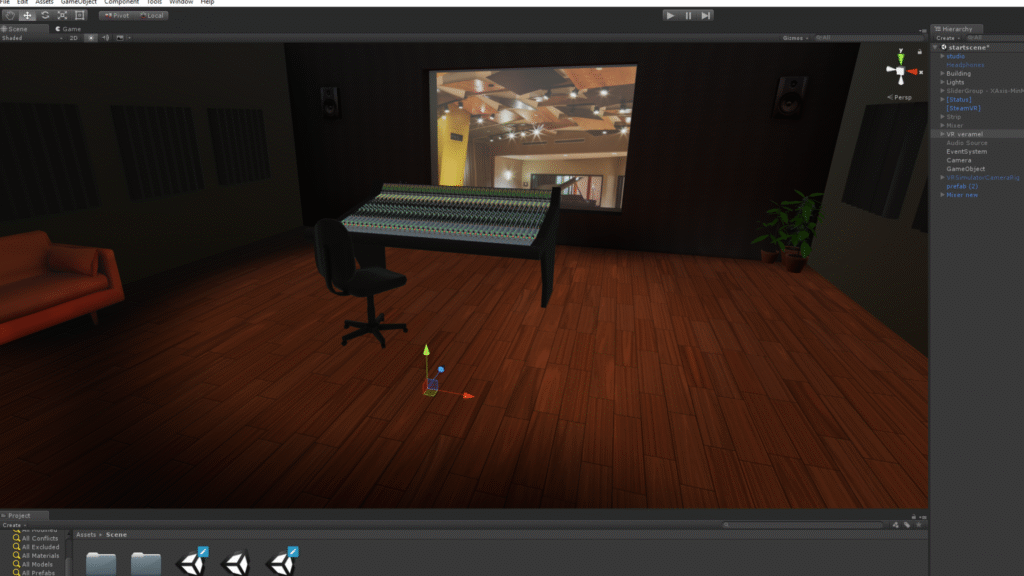

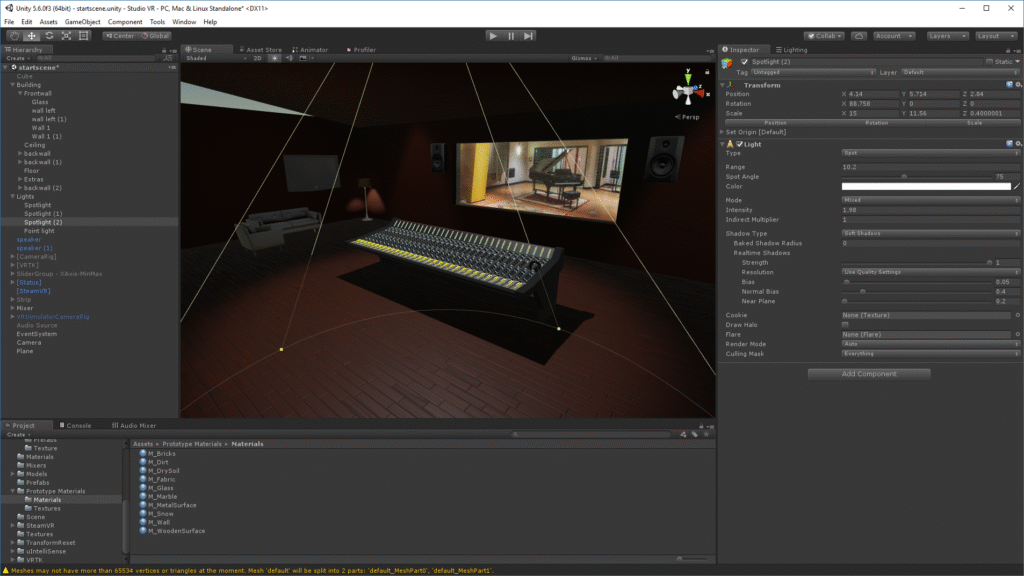

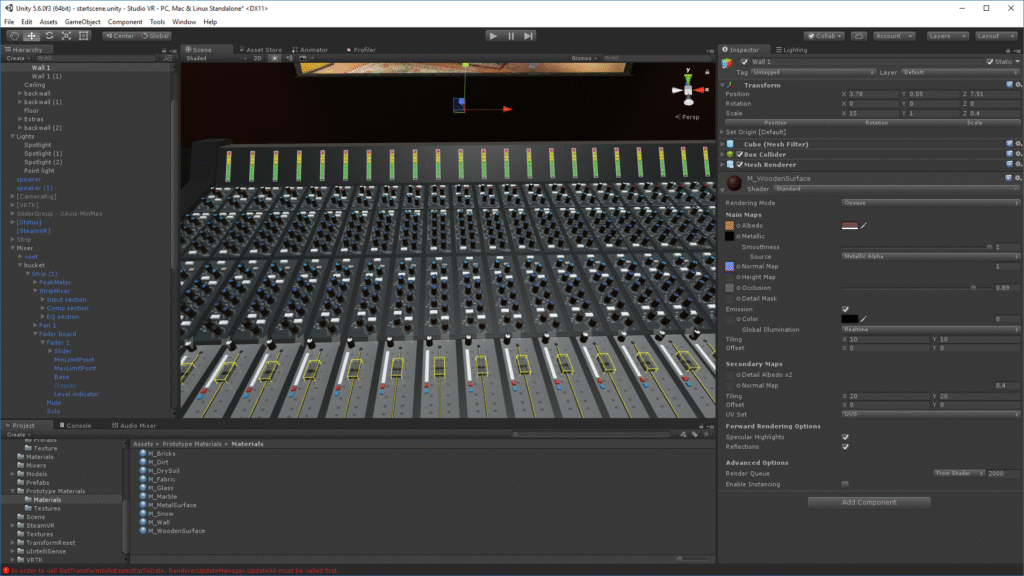

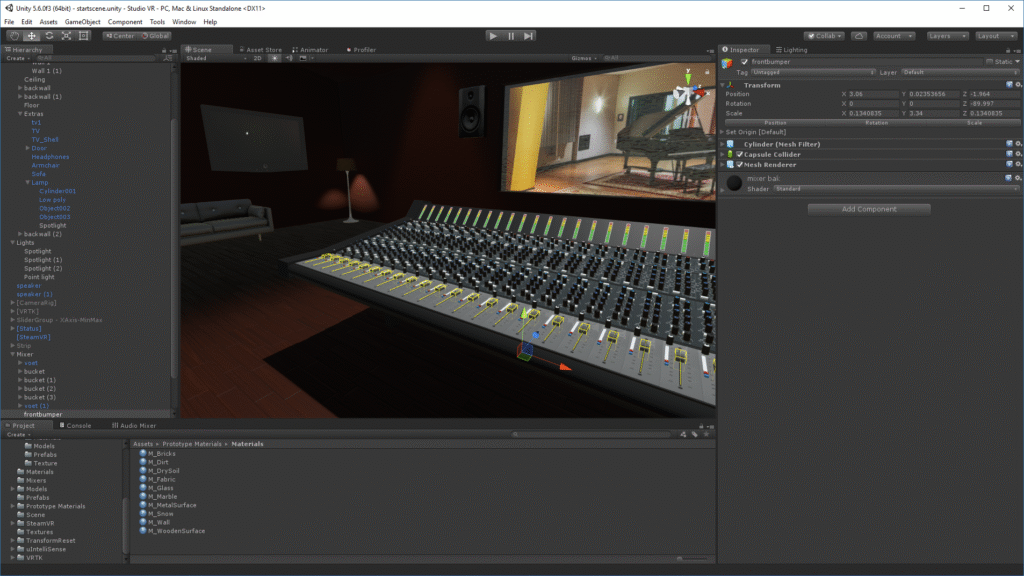

Building the console was the first step, so I modelled it in 3D using pictures and drawings and then programmed it using the manual. It was a lot of work, but after a while, I had the basics of the console working. The level, EQ, and monitoring were all functional, and I had a virtual 24-track Studer multitrack recorder acting as a WAV/MP3 file player.

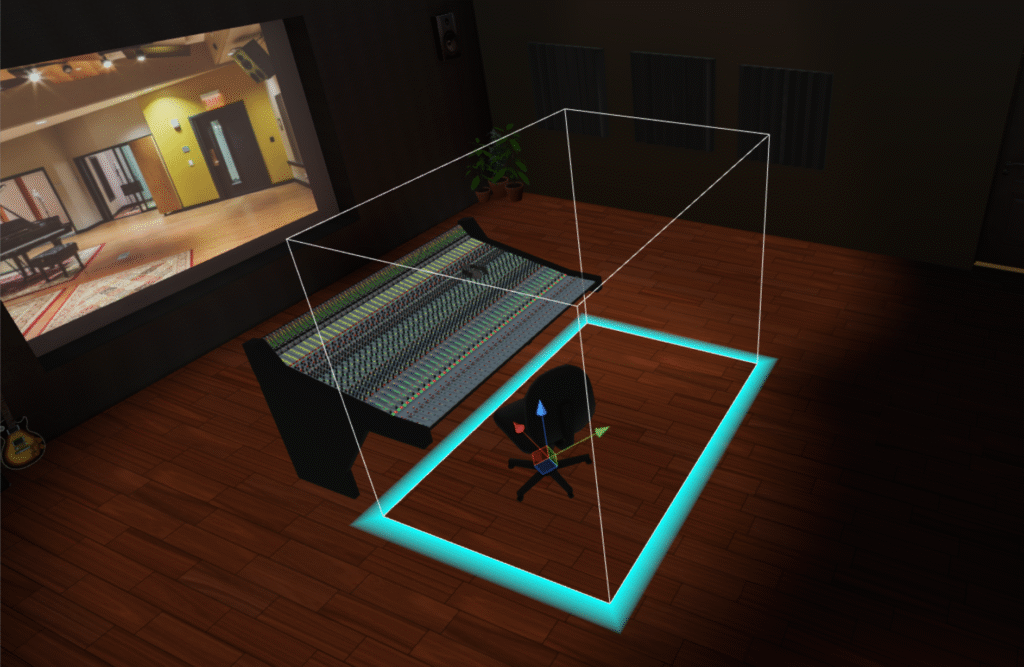

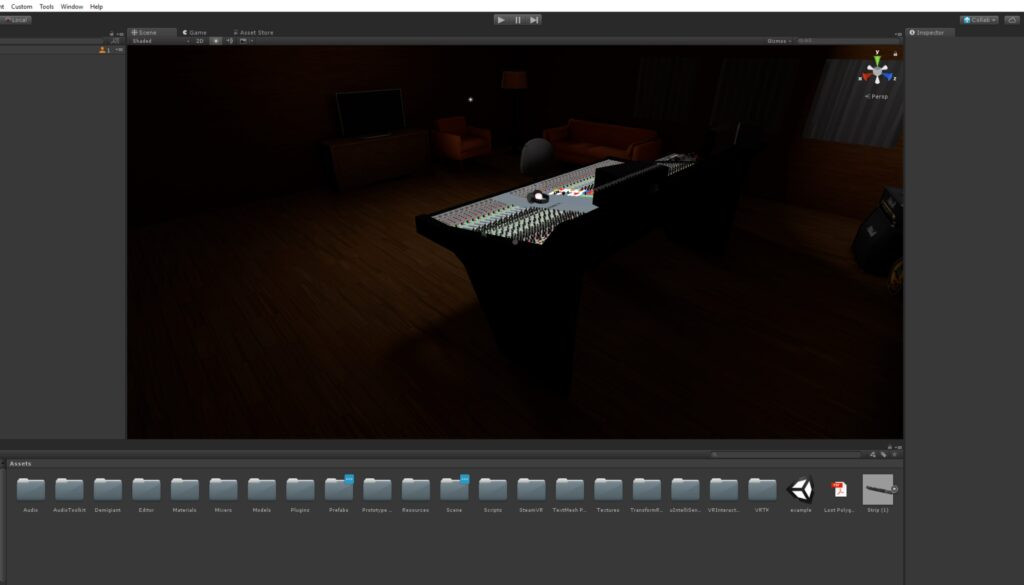

Of course, no recording studio is complete without a control room, so I quickly created a mock-up virtual control room to give a sense of space and scale when in VR. But then came the hard part – the following three challenges took the most time during development:

Challenges of Creating the Virtual Reality Recording Studio

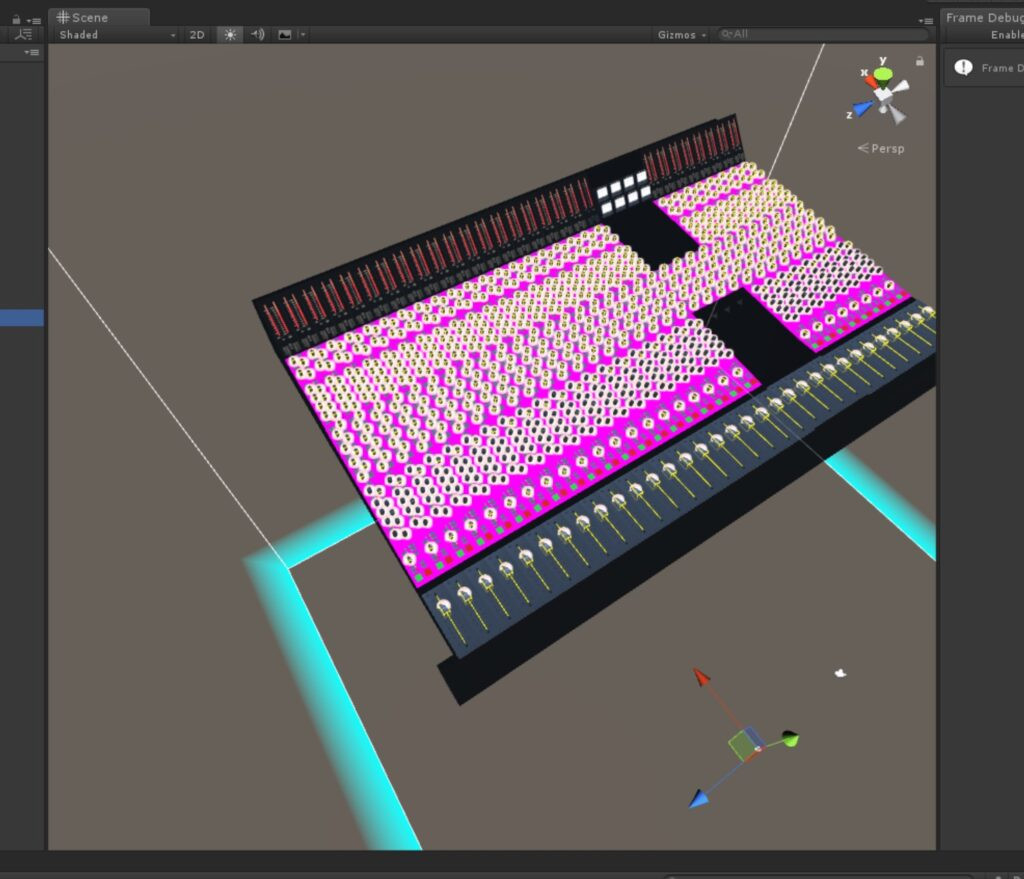

The first challenge of creating the Virtual Reality Recording Studio was a tough one! I had to optimise the graphics and code for VR so that it could render all the buttons and elements at a minimum of 90 frames per second on two screens. That’s a lot of work, especially with a console that has so many buttons and sliders. But after a lot of experimentation and tweaking, I was able to optimise the 3D models and use instancing and re-texturing of objects to get it just right. This is still being optimised in the current versions.

The second challenge was all about reducing the audio CPU time. In my initial test in 2015, the audio DSP was using too much CPU power to leave room for expansion. So I decided to learn a bit of C++ and modify the audio plugins for Unity to do parts of the processing in more efficient code, which left enough CPU power for VR. It was a lot of hard work, but I was determined to make it happen.
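To illustrate the kind of per-sample work that benefits from running in native code, here is a minimal C++ sketch of a single peaking EQ band. It is not the actual plugin code, and it does not use Unity's plugin API: the `PeakingEq` struct and its method names are hypothetical, and the coefficient formulas follow the widely used Audio EQ Cookbook peaking filter. A console channel would run several such bands per track, which is why a tight inner loop matters.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical single-band peaking EQ (biquad, transposed direct form II).
// Coefficients follow the Audio EQ Cookbook peaking filter, normalised by a0.
struct PeakingEq {
    double b0 = 1.0, b1 = 0.0, b2 = 0.0, a1 = 0.0, a2 = 0.0; // coefficients
    double z1 = 0.0, z2 = 0.0;                               // filter state

    // Compute coefficients once per parameter change, not once per sample.
    void configure(double sampleRate, double centerHz, double q, double gainDb) {
        assert(sampleRate > 0.0 && q > 0.0);
        assert(centerHz > 0.0 && centerHz < sampleRate / 2.0);
        const double pi = 3.14159265358979323846;
        const double A = std::pow(10.0, gainDb / 40.0);
        const double w0 = 2.0 * pi * centerHz / sampleRate;
        const double alpha = std::sin(w0) / (2.0 * q);
        const double a0 = 1.0 + alpha / A;
        b0 = (1.0 + alpha * A) / a0;
        b1 = (-2.0 * std::cos(w0)) / a0;
        b2 = (1.0 - alpha * A) / a0;
        a1 = (-2.0 * std::cos(w0)) / a0;
        a2 = (1.0 - alpha / A) / a0;
    }

    // Filter a mono buffer in place; the loop body is a handful of
    // multiply-adds with no allocation and no branching.
    void process(float* samples, int count) {
        for (int i = 0; i < count; ++i) {
            const double x = samples[i];
            const double y = b0 * x + z1;
            z1 = b1 * x - a1 * y + z2;
            z2 = b2 * x - a2 * y;
            samples[i] = static_cast<float>(y);
        }
    }
};
```

A band set to 0 dB passes the signal through unchanged, which makes a convenient sanity check before wiring such a filter to a virtual EQ knob.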

And finally, the third challenge was developing a natural VR interface to control the small buttons, sliders and pots with VR controllers. This was probably the most difficult challenge of all, and the interface went through many iterations before I arrived at the current implementation. I eventually settled on using haptic feedback and visual magnification in conjunction with natural gestures like turning, sliding and pressing. It’s a natural and intuitive way to control the console in VR, and I’m proud of how it turned out.

Research

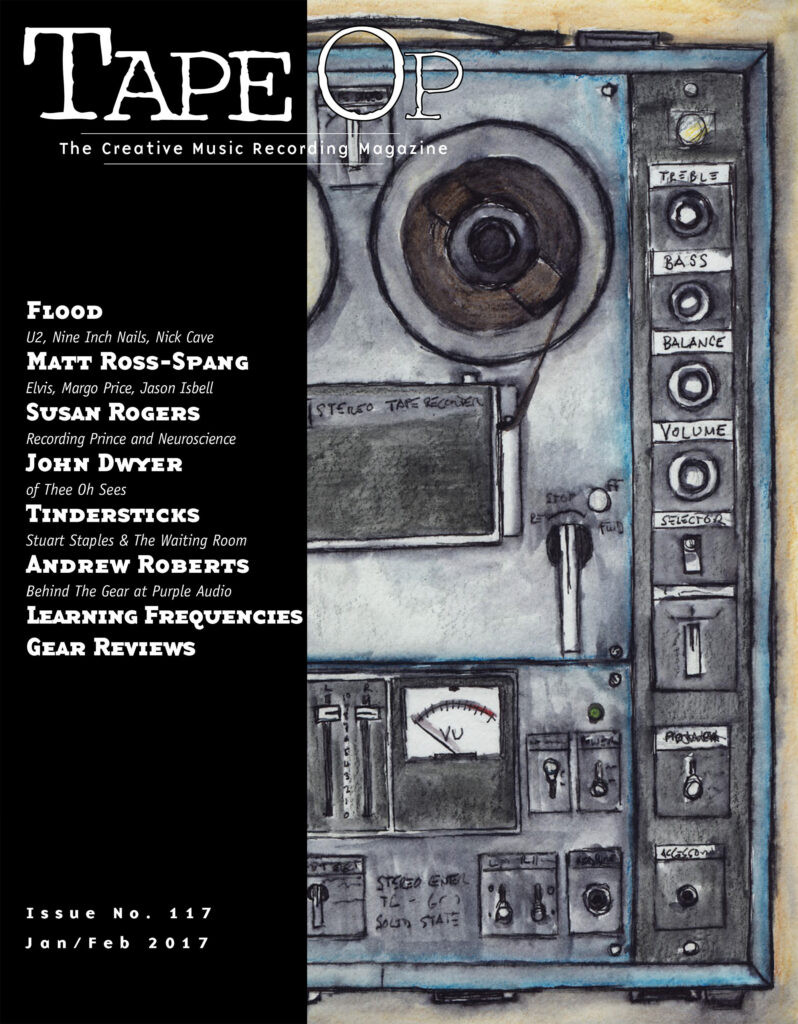

While I was on holiday and browsing the internet, I came across an article in an old issue of Tape Op magazine by the audio engineer and author John La Grou. This well-known and very capable audio engineer predicted back in 2014 that a similar VR application would be used in a studio environment. I contacted him because our goals seemed closely aligned.

The following is an excerpt from his conclusion:

“The future of audio engineering”, John La Grou – Tape Op magazine

By 2050, post houses with giant mixing consoles, racks of outboard hardware and patch panels, video editing suites, box-bound audio monitors, touch screens, hardware input devices, and large acoustic control rooms will become historical curiosities. We will have long ago abandoned the mouse. DAW video screens will be largely obsolete. Save for a quiet cubicle and a comfortable chair, the large, hardware-cluttered “production studio” will be mostly a quaint memory. Real-space physicality (e.g., pro audio gear) will be replaced with increasingly sophisticated head-worn virtuality. Trend charts suggest that by 2050, head-worn audio and visual 3D realism will be virtually indistinguishable from real space.

Microphones, cameras, and other front-end capture devices will become 360-degree spatial devices. Postproduction will routinely mix, edit, sweeten, and master in head-worn immersion. ……. (removed content)

Future recording studios will give us our familiar working tools: mixing consoles, outboard equipment, patch bays, DAW data screens, and boxy audio monitors. The difference is that all of this “equipment” will exist in a virtual space. When we don our VR headgear, everything required for audio or visual production is there “in front of us” with lifelike realism. In the virtual studio, every functional piece of audio gear every knob, fader, switch, screen, waveform, plugin, meter, and patch point will be visible and gesture controllable entirely in an immersive space.

Music post-production will no longer be subject to variable room acoustics. A recording’s spatial and timbral qualities will remain consistent in any studio in the world because the “studio” will be sitting on one’s head. Forget the classic mix room monitor array with big soffits, bookshelves, and Auratones. Headworn A/V allows the audio engineer to emulate and audition virtually any monitor environment, including any known real space or legacy playback system, from any position in any physical room.

You can find the full article in the Tape Op magazine archives, or at: https://www.stereophile.com/content/audio-engineering-next-40-years